Nearly every role in business relies on data every day. Whether the function is marketing, operations, finance, or analytics, the work depends on reliable and consistent data. The data landscape today consists of countless sources, so every organization needs to identify all relevant data and consolidate it in one place. The business question then becomes: how do you get data from external applications and sources into a consolidated, usable format that your organization can use to make informed business decisions?

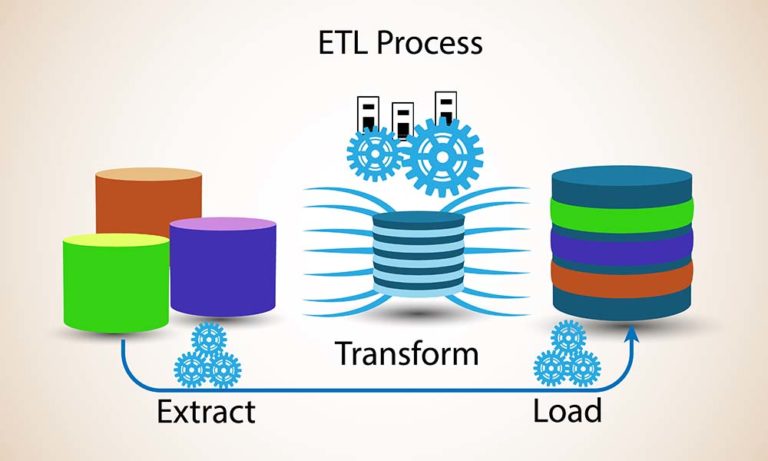

ETL (Extract, Transform, Load) is the process that enables this. It is a core building block of your data strategy and data warehouse efforts, and it improves data quality and consistency.

What is the ETL Process?

There are three main steps in the ETL process, and they apply to a wide range of data sources: anything from flat files posted to an FTP server to an application API, and any type of data pipeline in between. For example, Ready Signal provides streamlined integration of control data sets into ETL processes, which simplifies bringing external control data into your internal workflow.

Step 1 Extract: Extracting data from different source systems into a staging area for analysis and QA prior to integrating it into the data warehouse.

Step 2 Transform: Applying a defined set of rules to the raw data to convert it to a standardized format that is ready to be integrated into the data warehouse. This ensures that all data can work together in the data warehouse. This step includes but is not limited to the following:

- Filtering the data to only provide the subset necessary for the data warehouse

- Removing unnecessary data points

- Adding new calculated fields or metadata descriptors

- Cleaning the data

- Normalizing the data

- Applying advanced data science treatments to prepare data for modeling

Step 3 Load: Transferring the transformed data from the staging area to the data warehouse, where it serves as the single source of truth.
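To make the three steps concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production pipeline: the sample CSV, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a source system (e.g. a flat file).
RAW_CSV = """order_id,region,amount
1,east,100.50
2,WEST,
3,east,20.00
4,north,75.25
"""

def extract(raw_text):
    """Extract: read source rows into a staging list without changing them."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(staged_rows):
    """Transform: clean, standardize, and add a calculated field."""
    cleaned = []
    for row in staged_rows:
        if not row["amount"]:  # cleaning: skip rows with no amount
            continue
        amount = float(row["amount"])
        cleaned.append({
            "order_id": int(row["order_id"]),
            "region": row["region"].strip().lower(),  # standardize casing
            "amount": amount,
            "is_large_order": int(amount >= 50),  # calculated field (assumed rule)
        })
    return cleaned

def load(rows, conn):
    """Load: write transformed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, region TEXT, "
        "amount REAL, is_large_order INTEGER)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO orders "
        "VALUES (:order_id, :region, :amount, :is_large_order)",
        rows,
    )
    conn.commit()
    return len(rows)

# In-memory SQLite is only a stand-in for a real data warehouse.
warehouse = sqlite3.connect(":memory:")
loaded_count = load(transform(extract(RAW_CSV)), warehouse)
print(f"Loaded {loaded_count} rows")
```

Keeping each step in its own function means the staging data can be inspected between steps, which is where the analysis and QA described in Step 1 would take place.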

The ETL process is typically designed to start with a one-time batch load of all necessary historical data. After that, it runs on a defined schedule, in real time, or whenever new data from that source becomes available.
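One common way to implement this pattern is to track a "high-water mark": the timestamp of the newest record already loaded. The sketch below assumes the source exposes an `updated_at` timestamp on every record, which not every source does. The sample records, the function names, and the dictionary standing in for the warehouse are hypothetical.

```python
from datetime import datetime

# Hypothetical source records; a real source would be a file, API, or database.
SOURCE = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 2)},
    {"id": 3, "updated_at": datetime(2024, 1, 3)},
]

def fetch_since(source, watermark):
    """Return records newer than the watermark; None means a full historical load."""
    if watermark is None:
        return list(source)
    return [r for r in source if r["updated_at"] > watermark]

def run_load(source, warehouse, watermark):
    """Load one batch and return the updated watermark and the batch size."""
    batch = fetch_since(source, watermark)
    for record in batch:
        warehouse[record["id"]] = record  # insert or overwrite by id
    if batch:
        watermark = max(r["updated_at"] for r in batch)
    return watermark, len(batch)

warehouse = {}

# First run: no watermark yet, so all historical data is loaded.
watermark, first_count = run_load(SOURCE, warehouse, None)

# New data arrives at the source; the next scheduled run picks up only that.
SOURCE.append({"id": 4, "updated_at": datetime(2024, 1, 4)})
watermark, second_count = run_load(SOURCE, warehouse, watermark)

print(first_count, second_count)
```

Because each scheduled run only processes records newer than the watermark, run time tends to scale with the amount of new data rather than with the full history.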

Common ETL Issues to Watch Out For

As with anything in life, processes that source and move data are not without possible hiccups. The main issues organizations typically face relate to Quality Assurance, scalability, or both.

First and foremost, when the ETL process is put in place, the organization needs proper Quality Assurance checks to validate that the transformed data is still complete and correct. The last thing you want is to publish inaccurate data to the data warehouse.

The second layer of Quality Assurance is monitoring the success of the ETL process itself. Whether you build your ETL processes in-house or use a third-party ETL tool, it is important to monitor their status and confirm that all processes are both functioning and completing as expected.

Lastly, organizations need to be aware of and manage the scalability of their ETL processes. Given the volume of data available, the completion time and processing power required can vary widely depending on both the size of the data and the complexity of the transformations. For example, if a data set is posted every 24 hours but the ETL process takes 30 hours to complete, each run finishes after the next data set has already been posted, so your organization never has the most up-to-date data. Being mindful of how the ETL process fits into your business strategy, and finding ways to optimize it to meet your business objectives, is important.
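The checks described above can start out very simple. The following sketch shows one possible shape for a completeness check on a transformed batch and for the run-time comparison from the 24-hour example. The function names, the field names, and the idea of passing in an expected row count are assumptions made for illustration.

```python
def validate_batch(expected_count, rows, required_fields):
    """Return a list of problems found in a transformed batch (empty if none)."""
    problems = []
    if len(rows) != expected_count:
        problems.append(f"expected {expected_count} rows, got {len(rows)}")
    for index, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) in (None, ""):
                problems.append(f"row {index}: missing {field}")
    return problems

def keeps_up_with_source(run_hours, publish_interval_hours):
    """True if one ETL run finishes before the next data set is posted."""
    return run_hours <= publish_interval_hours

# Hypothetical batch: one row short, and one row has no amount.
batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
]
problems = validate_batch(3, batch, ["order_id", "amount"])
for problem in problems:
    print(problem)

# The example from the text: data posted every 24 hours, ETL takes 30 hours.
print(keeps_up_with_source(30, 24))
```

A pipeline could refuse to publish to the warehouse when `problems` is not empty, and raise an alert when a run no longer keeps up with the source.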

Business Applications of ETL

Whether you are a data scientist, a marketer, or an executive, clean, consistent, and accessible data is important to your everyday work. The ETL process, in coordination with a sound data strategy for your organization, enables this. It becomes a building block that makes your data accessible and useful for:

- Supporting modeling and analytics efforts

- Making the most of dashboarding tools

- Providing input and insight into business forecasts

- Creating efficiencies and saving time, so that disparate resources do not have to consolidate, clean, and harmonize these data manually

Without ETL tools, it would be difficult and time consuming to pull all of your data together and make it useful for integration into your data warehouse. It is important to understand the value of these tools and make them part of your overall data strategy.